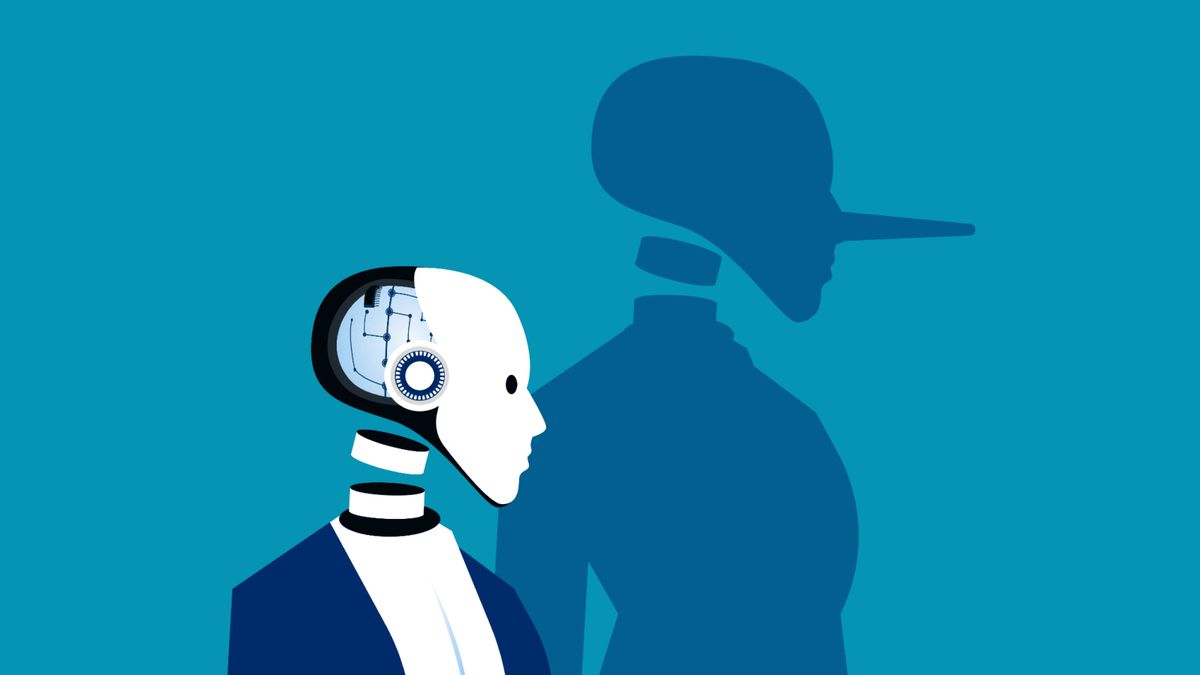

Current approaches to artificial intelligence (AI) are unlikely to create models that can match human intelligence, according to a recent survey of industry experts.

Out of the 475 AI researchers queried for the survey, 76% said the scaling up of large language models (LLMs) was “unlikely” or “very unlikely” to achieve artificial general intelligence (AGI), the hypothetical milestone where machine learning systems can learn as effectively as, or better than, humans.

This is a noteworthy dismissal of tech industry predictions that, since the generative AI boom of 2022, have maintained that the current state-of-the-art AI models only need more data, hardware, energy and money to eclipse human intelligence.

Now, as recent model releases appear to stagnate, most of the researchers polled by the Association for the Advancement of Artificial Intelligence believe tech companies have arrived at a dead end — and money won’t get them out of it.

“I think it’s been apparent since soon after the release of GPT-4, the gains from scaling have been incremental and expensive,” Stuart Russell, a computer scientist at the University of California, Berkeley who helped organize the report, told Live Science. “[AI companies] have invested too much already and cannot afford to admit they made a mistake [and] be out of the market for several years when they have to repay the investors who have put in hundreds of billions of dollars. So all they can do is double down.”

Diminishing returns

The startling improvements to LLMs in recent years are partly owed to their underlying transformer architecture. This is a type of deep learning architecture, first created in 2017 by Google scientists, that grows and learns by absorbing training data from human input.

This enables models, when given a prompt, to generate probabilistic patterns by feeding the prompt forward through their neural networks (collections of machine learning algorithms arranged to mimic the way the human brain learns), with their answers improving in accuracy with more data.

But continued scaling of these models requires eye-watering quantities of money and energy. The generative AI industry raised $56 billion in venture capital globally in 2024 alone, with much of this going into building enormous data center complexes, the carbon emissions of which have tripled since 2018.

Projections also show the finite human-generated data essential for further growth will most likely be exhausted by the end of this decade. Once this has happened, the alternatives will be to begin harvesting private data from users or to feed AI-generated “synthetic” data back into models, which could put them at risk of collapsing from errors created after they swallow their own output.

But current models are likely limited not just by their hunger for resources, the survey experts say, but by fundamental constraints in their architecture.

“I think the basic problem with current approaches is that they all involve training large feedforward circuits,” Russell said. “Circuits have fundamental limitations as a way to represent concepts. This implies that circuits have to be enormous to represent such concepts even approximately — essentially as a glorified lookup table — which leads to vast data requirements and piecemeal representation with gaps. Which is why, for example, ordinary human players can easily beat the “superhuman” Go programs.”

The future of AI development

All of these bottlenecks have presented major challenges to companies working to boost AI’s performance, causing scores on evaluation benchmarks to plateau and OpenAI’s rumored GPT-5 model to never appear, some of the survey respondents said.

Assumptions that improvements could always be made through scaling were also undercut this year by the Chinese company DeepSeek, which matched the performance of Silicon Valley’s expensive models at a fraction of the cost and power. For these reasons, 79% of the survey’s respondents said perceptions of AI capabilities don’t match reality.

“There are many experts who think this is a bubble,” Russell said. “Particularly when reasonably high-performance models are being given away for free.”

Yet that doesn’t mean progress in AI is dead. Reasoning models — specialized models that dedicate more time and computing power to queries — have been shown to produce more accurate responses than their traditional predecessors.

The pairing of these models with other machine learning systems, especially after they’re distilled down to specialized scales, is an exciting path forward, according to respondents. And DeepSeek’s success points to plenty more room for engineering innovation in how AI systems are designed. The experts also say probabilistic programming has the potential to get closer to AGI than the current circuit models.

“Industry is placing a big bet that there will be high-value applications of generative AI,” Thomas Dietterich, a professor emeritus of computer science at Oregon State University who contributed to the report, told Live Science. “In the past, big technological advances have required 10 to 20 years to show big returns.”

“Often the first batch of companies fail, so I would not be surprised to see many of today’s GenAI startups failing,” he added. “But it seems likely that some will be wildly successful. I wish I knew which ones.”